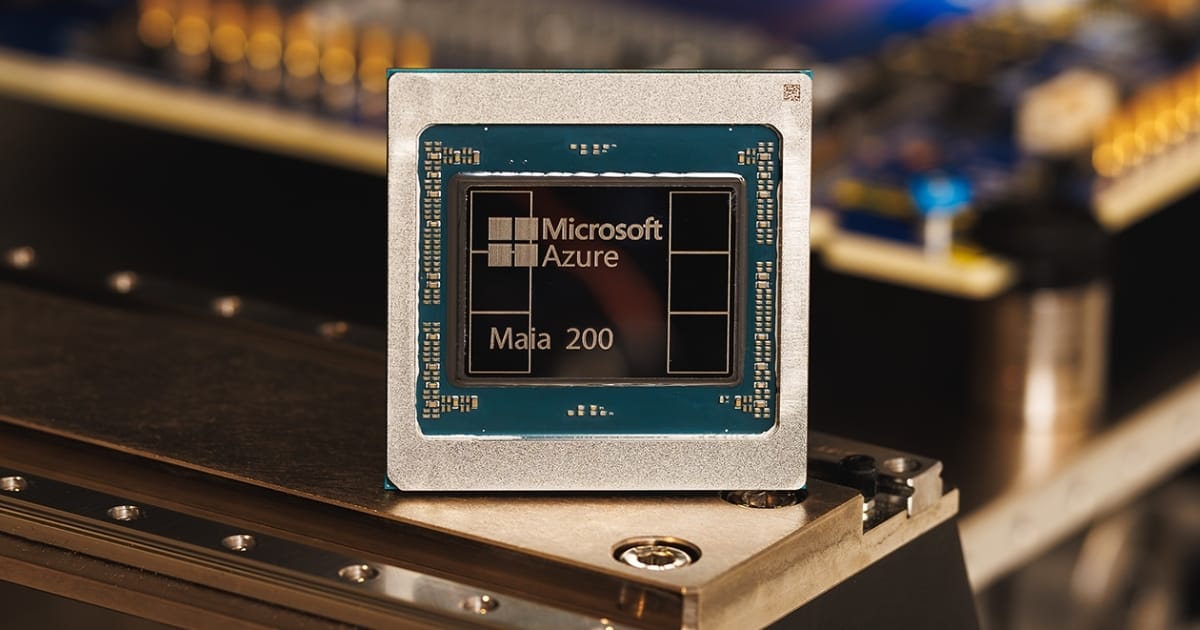

Microsoft has introduced Maia 200, an AI inference accelerator designed to cut the cost and increase the speed of running large language models (LLMs) like GPT-5.2.

What’s happening: Maia 200 is deployed in Microsoft datacenters in the U.S. and integrated with Azure and Microsoft 365 Copilot. Developers can now preview the Maia SDK, which offers PyTorch integration, Triton compilation, and low-level programming for workload optimization.

Market context: The Maia 200 launch comes amid a broader push from hyperscalers to optimize infrastructure specifically for AI inference. Recently, Google previewed its next-generation TPU v8 for large-scale AI workloads, and Amazon highlighted expanded availability of Trainium 3 for inference at scale.

Microsoft’s timing signals that hyperscalers are aggressively moving to drive down AI inference costs while differentiating their cloud offerings, as demand from enterprises, startups, and AI-first applications continues to accelerate.

Why it matters:

Cost efficiency: Microsoft claims Maia 200 improves performance per dollar by ~30% compared to its previous-generation hardware.

Faster deployment: The end-to-end design, spanning chip, rack, and software, lets Microsoft run models on Maia 200 within days of first silicon, cutting infrastructure deployment time in half.

Scalable inference: Its two-tier Ethernet-based scale-up network and direct interconnects allow clusters of over 6,000 accelerators to run efficiently, reducing stranded capacity and energy use.

Enterprise signal: This launch shows hyperscalers are investing heavily in inference-optimized infrastructure, not just general-purpose GPUs, to support generative AI workloads at scale.
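For a rough sense of what the ~30% performance-per-dollar claim above means for a bill, the short Python sketch below converts a performance-per-dollar gain into the implied drop in cost for the same amount of work. It assumes the gain translates directly into cost per unit of inference, which real pricing may not reflect; the function name and the monthly spend figure are hypothetical and used only for illustration.

```python
def cost_reduction(perf_per_dollar_gain: float) -> float:
    """Fractional drop in cost per unit of work for a given perf-per-dollar gain.

    If each dollar buys (1 + gain) times as much work, the same work
    costs 1 / (1 + gain) of what it did before.
    """
    return 1.0 - 1.0 / (1.0 + perf_per_dollar_gain)


gain = 0.30  # the ~30% figure Microsoft claims for Maia 200
reduction = cost_reduction(gain)

baseline_spend = 100_000.0  # hypothetical monthly inference spend, in dollars
new_spend = baseline_spend * (1.0 - reduction)

print(f"Cost per unit of work falls by {reduction:.1%}")
print(f"${baseline_spend:,.0f} of work would cost about ${new_spend:,.0f}")
```

Under that assumption, a 30% gain in performance per dollar works out to roughly a 23% lower cost for the same workload, not a 30% lower cost.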

What’s next: Enterprises should read this as a signal that hyperscalers will continue pushing specialized AI infrastructure to reduce costs and improve performance for AI-driven applications. As the market heats up, end users should expect faster, cheaper cloud AI services and increasing pressure on teams to leverage cloud-native AI for real-time analytics, data generation, and model inference.

—

Uplink provides news for those who build, run, and care about networks. Every week, we break down the moves, mergers, and technologies shaping the enterprise networking industry, so you know what matters and why.